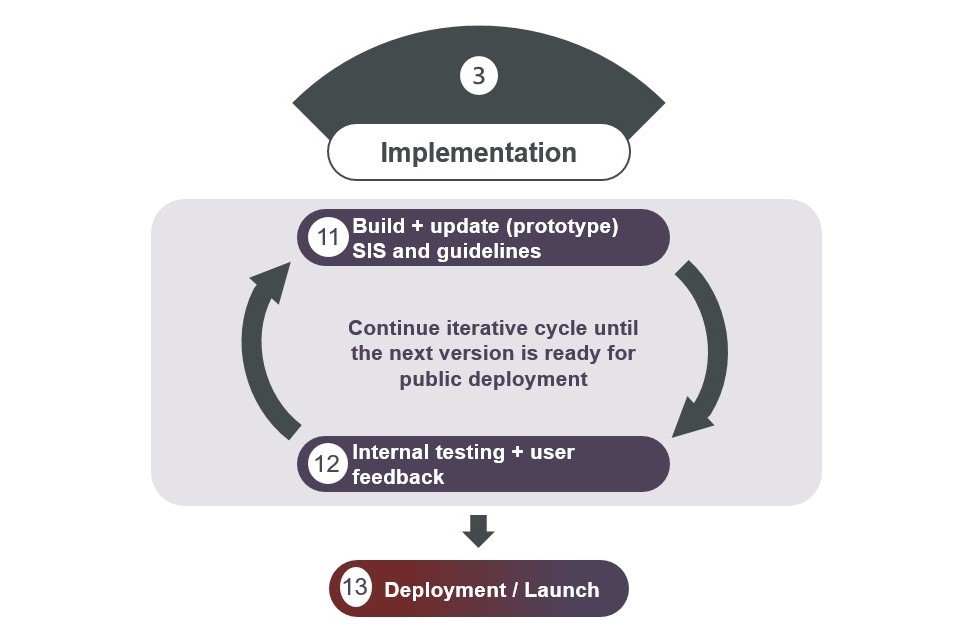

Framework: Implementation Phase

The implementation phase consists of three components and takes the user through an iterative cycle of building the system. It focuses primarily on the capacity and skills of the operators, ensuring that the SIS is functional and meets users' needs.

Objective:

To build an early, simplified version of the system for testing concepts, design features and functionality before a more complete product is delivered. Your data inventory, needs assessment findings, data strategy and idealised system design will inform the decisions you make about building the system in this phase.

Key definitions:

- Prototype: a tangible model that helps stakeholders visualise the proposed solution, so that the concept can be validated and refined through early review and feedback. It is a stripped-down version of the full product and can be low-fidelity, high-fidelity, or both.

Activities:

The following activities for developing an SIS prototype cover the areas needed for a minimum prototype that can be user tested, as well as areas that are optional for the prototype or can be considered for a later, improved version. The SIS implementers in each country will determine exactly what to include in the initial prototype.

Build SIS (prototype)

- Review the system architectural design (Component 8). As you go through this iterative cycle and collect feedback, you may need to revise design plans and revisit earlier components.

- It is recommended to start small with a minimum viable product (MVP) and to keep building on this prototype through each iteration.

- Between iterations, request feedback from the target groups who will use the system.

- Extend the prototype with each iteration until it is ready to launch to a broader audience. Iteration and improvement continue beyond launch.

User Support and Documentation

Help Center:

- Comprehensive user guides, FAQs, and troubleshooting articles.

- Interactive tutorials, chatbots, and video demonstrations (optional).

Customer Support:

- Support tickets, live chat, and email support for user assistance.

- Feedback system to collect user suggestions and improve the system.

Objective:

Gather feedback from diverse stakeholders and users to improve the SIS so that it is fully functional and meets their needs.

Key definitions:

- Beta testing: the final opportunity for users to test the SIS before its public launch, so that any outstanding issues can be addressed (a functional feedback loop should still be established afterwards for continuous improvement).

Activities:

Preparation Phase

Define Objectives:

- Identify what aspects of the SIS need testing (e.g., usability, functionality, performance).

- Set clear goals for what the testing should achieve.

Recruit Test Participants:

- It is recommended to start with an internal test group.

- For further testing, select a diverse group of users representative of the target audience (e.g., farmers, soil scientists, agronomists in public, private and NGO sectors).

- Include users with varying levels of technical expertise.

Create Test Scenarios:

- Develop realistic scenarios and tasks that users are likely to perform on the SIS.

- Ensure scenarios cover all major functionalities of the system.
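
One lightweight way to check that the scenarios cover every major function is to record which functions each scenario exercises and list any that no scenario touches. A minimal sketch in Python; the feature names and scenarios are hypothetical placeholders for your own.

```python
"""Check that test scenarios cover all major SIS functions (hypothetical names)."""

# Hypothetical list of major functions the SIS should support.
MAJOR_FEATURES = {"data_upload", "soil_map_viewer", "report_export", "user_login"}

# Hypothetical scenarios, each mapped to the features it exercises.
SCENARIOS = {
    "Farmer looks up soil pH for a field": {"user_login", "soil_map_viewer"},
    "Agronomist uploads survey results": {"user_login", "data_upload"},
}


def uncovered_features(features, scenarios):
    """Return the features that no scenario exercises, sorted for stable output."""
    covered = set().union(*scenarios.values())
    return sorted(features - covered)


if __name__ == "__main__":
    missing = uncovered_features(MAJOR_FEATURES, SCENARIOS)
    if missing:
        print("Features without a test scenario:", ", ".join(missing))
    else:
        print("All major features are covered.")
```

With the sample data, `report_export` is flagged, which would prompt writing a scenario such as exporting a soil report.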

Testing Environment

Setup:

- Prepare devices and software required for testing (e.g., computers, tablets, soil sensors).

Documentation:

- Provide users with any necessary documentation or instructions for using the prototype.

- Prepare data collection tools (e.g., survey forms, screen recording software).

Conducting the Tests

Introduction:

- Brief participants on the purpose of the test and what they will be doing.

Task Execution:

- Ask participants to perform specific tasks based on the test scenarios.

- Observe users as they interact with the system, noting any difficulties or confusion.

- Encourage users to think aloud, explaining their thought process as they use the system.

Feedback Collection:

- Conduct post-task interviews to gather detailed feedback on user experience.

- Ask participants to suggest improvements and additional functions.

- Use questionnaires to collect quantitative data on user satisfaction, ease of use, and overall impressions.

Types of Testing

Usability Testing:

- Focus on the ease of use, navigation, and user interface design.

- Measure task completion time, error rates, and user satisfaction.
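
The usability measures above can be computed from simple session records. A minimal sketch, assuming each observed attempt is logged with the task name, whether it was completed, the time taken in seconds and the number of errors; the `TaskAttempt` record layout and sample values are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record layout)."""
    task: str
    completed: bool
    seconds: float
    errors: int


def summarise(attempts):
    """Per task: completion rate, mean time of completed attempts, mean errors per attempt."""
    by_task = {}
    for a in attempts:
        by_task.setdefault(a.task, []).append(a)
    summary = {}
    for task, rows in by_task.items():
        done = [r for r in rows if r.completed]
        summary[task] = {
            "completion_rate": len(done) / len(rows),
            # Time on task is averaged over completed attempts only.
            "mean_seconds": mean(r.seconds for r in done) if done else None,
            "errors_per_attempt": mean(r.errors for r in rows),
        }
    return summary


if __name__ == "__main__":
    log = [
        TaskAttempt("find field pH", True, 42.0, 0),
        TaskAttempt("find field pH", True, 58.0, 2),
        TaskAttempt("find field pH", False, 120.0, 4),
    ]
    print(summarise(log))
```

Averaging time only over completed attempts keeps abandoned attempts from inflating it; user satisfaction is better captured through the questionnaires used during feedback collection.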

Functional Testing:

- Verify that all features work as intended.

- Test data input, processing, and output functionalities.
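
Functional checks of data input and processing can be automated as small tests that run after every change. The sketch below assumes a hypothetical `validate_sample` function for uploaded soil sample records; the field names are illustrative, while the ranges are the standard pH scale (0 to 14) and valid latitude/longitude bounds.

```python
"""Functional tests for a hypothetical soil-record validator (illustrative only)."""

REQUIRED_FIELDS = ("sample_id", "latitude", "longitude", "ph")  # hypothetical schema


def validate_sample(record):
    """Return a list of problems found in one uploaded record; empty means valid."""
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if f not in record]
    if "ph" in record and not 0 <= record["ph"] <= 14:
        problems.append("ph outside 0-14")
    if "latitude" in record and not -90 <= record["latitude"] <= 90:
        problems.append("latitude outside -90..90")
    if "longitude" in record and not -180 <= record["longitude"] <= 180:
        problems.append("longitude outside -180..180")
    return problems


def test_valid_record_passes():
    record = {"sample_id": "S1", "latitude": -1.3, "longitude": 36.8, "ph": 6.5}
    assert validate_sample(record) == []


def test_out_of_range_ph_is_rejected():
    record = {"sample_id": "S2", "latitude": 0, "longitude": 0, "ph": 15}
    assert validate_sample(record) == ["ph outside 0-14"]


def test_missing_field_is_reported():
    record = {"sample_id": "S3", "latitude": 0, "longitude": 0}
    assert validate_sample(record) == ["missing ph"]


if __name__ == "__main__":
    for test in (test_valid_record_passes, test_out_of_range_ph_is_rejected,
                 test_missing_field_is_reported):
        test()
    print("all functional checks passed")
```

The `test_` functions also follow the naming convention that pytest collects, so they can move into a test suite as the prototype grows.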

Performance Testing:

- Assess the system's response time, stability, and resource usage under different loads.

- Simulate high-usage scenarios to identify potential bottlenecks.
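
A basic load test sends many concurrent requests and records how long each takes, so response times can be compared as load increases. In the sketch below, `query_sis` is a hypothetical placeholder that only sleeps; you would replace it with a real call to your SIS (for example a request to its API). For larger tests, dedicated tools such as Apache JMeter or Locust are usually a better fit.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def query_sis():
    """Hypothetical stand-in for one request to the SIS; replace with a real call."""
    time.sleep(0.01)


def timed_call(fn):
    """Run fn once and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def load_test(fn, total_requests=200, concurrent_users=20):
    """Issue total_requests calls to fn using concurrent_users worker threads."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        durations = list(pool.map(lambda _: timed_call(fn), range(total_requests)))
    # 99 cut points (1st..99th percentile); "inclusive" never extrapolates past min/max.
    pct = quantiles(durations, n=100, method="inclusive")
    return {
        "requests": len(durations),
        "median_s": pct[49],
        "p95_s": pct[94],
        "max_s": max(durations),
    }


if __name__ == "__main__":
    # Compare results at increasing load to see where response times degrade.
    for users in (1, 10, 50):
        print(users, load_test(query_sis, total_requests=100, concurrent_users=users))
```

Reporting the 95th percentile alongside the median shows whether a minority of users experience much slower responses, which averages can hide.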

Security Testing:

- Test user authentication, data encryption, and access control mechanisms.

- Identify vulnerabilities that could be exploited.
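
Access control can be checked systematically by writing down which roles should be allowed to perform which actions and comparing every role/action pair against what the system actually permits. In the sketch below the roles, actions and the `system_allows` function are hypothetical; in practice `system_allows` would attempt the action against a test deployment of the SIS while signed in as a user with that role.

```python
from itertools import product

ROLES = ("public", "contributor", "administrator")      # hypothetical roles
ACTIONS = ("view_maps", "upload_data", "manage_users")  # hypothetical actions

# Expected policy: the (role, action) pairs that should be permitted.
EXPECTED = {
    ("public", "view_maps"),
    ("contributor", "view_maps"),
    ("contributor", "upload_data"),
    ("administrator", "view_maps"),
    ("administrator", "upload_data"),
    ("administrator", "manage_users"),
}


def system_allows(role, action):
    """Hypothetical stand-in for the SIS's real permission check.

    It deliberately contains a bug (contributors can manage users) so that
    the comparison below has something to find.
    """
    granted = {
        "public": {"view_maps"},
        "contributor": {"view_maps", "upload_data", "manage_users"},
        "administrator": {"view_maps", "upload_data", "manage_users"},
    }
    return action in granted.get(role, set())


def access_mismatches():
    """List every (role, action) pair where the system differs from the policy."""
    issues = []
    for role, action in product(ROLES, ACTIONS):
        expected = (role, action) in EXPECTED
        actual = system_allows(role, action)
        if expected != actual:
            kind = "over-permissive" if actual else "over-restrictive"
            issues.append((role, action, kind))
    return issues


if __name__ == "__main__":
    for role, action, kind in access_mismatches():
        print(f"{kind}: {role} -> {action}")
```

Checking every pair, not only the permitted ones, is what surfaces over-permissive access, the kind of weakness that could be exploited.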

Data Analysis

Qualitative Analysis:

- Review notes and recordings from the test sessions.

- Identify common issues and areas of confusion.

Quantitative Analysis:

- Analyse survey results and task performance metrics.

- Look for patterns in user behaviour and feedback.
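
This framework does not name a specific questionnaire. As one example, the System Usability Scale (SUS) is a widely used ten-item questionnaire answered from 1 (strongly disagree) to 5 (strongly agree). Its standard scoring takes answer minus 1 for odd-numbered items and 5 minus answer for even-numbered items, then multiplies the sum by 2.5 to give a score from 0 to 100. The participant answers below are hypothetical.

```python
from statistics import mean


def sus_score(responses):
    """Score one completed SUS questionnaire (ten answers, each 1-5) on a 0-100 scale."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten answers between 1 and 5")
    total = 0
    for item, answer in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5


if __name__ == "__main__":
    # Hypothetical answers from three participants.
    participants = [
        [4, 2, 4, 1, 5, 2, 4, 2, 4, 2],
        [3, 3, 3, 2, 4, 3, 3, 3, 3, 2],
        [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    ]
    scores = [sus_score(p) for p in participants]
    print("Individual scores:", scores)
    print("Mean SUS:", mean(scores))
```

With small test groups, look at individual scores as well as the mean, since one very low score can point to a specific usability problem worth following up in interviews.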

Reporting and Iteration

Report Findings:

- Compile a detailed report of the testing results, highlighting key issues and user feedback.

- Include recommendations for improvements based on the findings.

Prioritise Issues:

- Categorise issues by severity and impact on user experience.

- Prioritise fixes and enhancements based on user needs and system goals.
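
A simple way to combine severity and impact is to rate each on a small scale and sort issues by the product of the two ratings. The 1 to 3 scales, the scoring rule and the example issues below are hypothetical; many teams use a severity/impact matrix or their own rules instead.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    severity: int  # 1 = cosmetic, 2 = degrades a task, 3 = blocks a task (hypothetical scale)
    impact: int    # 1 = few users affected, 2 = some users, 3 = most users (hypothetical scale)

    @property
    def priority(self) -> int:
        return self.severity * self.impact


def prioritise(issues):
    """Sort issues by priority score, highest first; ties are broken by severity."""
    return sorted(issues, key=lambda i: (i.priority, i.severity), reverse=True)


if __name__ == "__main__":
    backlog = [
        Issue("Map legend text too small", severity=1, impact=3),
        Issue("CSV upload fails for large files", severity=3, impact=2),
        Issue("Login times out on slow connections", severity=3, impact=3),
        Issue("Typo on help page", severity=1, impact=1),
    ]
    for issue in prioritise(backlog):
        print(issue.priority, issue.title)
```

Breaking ties by severity means a blocking problem for a few users ranks above a cosmetic one that many users notice.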

Iterate and Improve:

- Review the required changes against the budget.

- Make necessary changes to the SIS prototype based on testing feedback.

- Plan for subsequent rounds of testing to ensure continuous improvement.

Final Validation

Beta Testing:

- Release the improved SIS to a larger group of users for beta testing.

- Collect additional feedback and make final adjustments before the official launch.

Validation Testing:

- Conduct final testing to ensure all issues have been resolved and the system meets user requirements.

- Seek relevant approvals to confirm that the SIS is ready for deployment.

Objective:

Launch a fully functional SIS to the public, with an equipped team and a feedback mechanism for continuous improvement.

Key definitions:

- Soft launch: an initial launch of the SIS to a smaller audience before it is made available more widely.

Activities:

Develop a comprehensive plan for the SIS deployment

- How will you structure the initial soft launch?

- Do your plans include migration, risk management and rollback (if required)?
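
One common way to structure a soft launch, with a simple rollback path, is a percentage rollout: each user is assigned a stable bucket from a hash of their ID, and only users whose bucket falls below the current rollout percentage get the new SIS. Setting the percentage back to 0 withdraws access quickly, although it does not undo data migrations, which need their own rollback plan. The `new_sis` feature name and the user IDs below are hypothetical.

```python
import hashlib


def bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def has_access(user_id: str, rollout_percent: int, feature: str = "new_sis") -> bool:
    """True if this user falls inside the current rollout percentage (0-100)."""
    return bucket(user_id, feature) < rollout_percent


if __name__ == "__main__":
    users = [f"user-{n}" for n in range(1000)]  # hypothetical user IDs
    for percent in (0, 10, 50, 100):
        enabled = sum(has_access(u, percent) for u in users)
        print(f"rollout {percent}%: {enabled} of {len(users)} users")
```

Because the bucket depends only on the user ID and feature name, users admitted at 10% stay admitted as the percentage rises, so nobody switches back and forth between versions during the soft launch.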

Have you developed a marketing and communications plan for the launch?

- Will you publish press releases, newsletters and/or social media posts directly or through regional fora?

Implement the training and documentation plan

- How will you structure a series of live demos and Q&A?

- Have you shown new users where to go to learn how to use the SIS?

- How will you ensure continuous capacity building on usage of the system?

Monitor Feedback

- How will you collect and manage incident reports?

- Is the feedback mechanism live?

- What is the strategy to actively seek out feedback and address it?

- Based on the feedback, is it necessary to go back to previous components?